A report from foreign brokerage Nomura Securities pointed out that, with the rapid development of generative AI and large language models (LLMs), data centers worldwide are accelerating upgrades to their AI network infrastructure. In June 2025, Broadcom announced the Tomahawk 6 (TH6) switch chip, setting off a new leap in AI networking equipment performance.

Broadcom's TH6 is built on a 3-nanometer process, supports 200G SerDes, and offers 102.4 Tbps of switching capacity, twice that of existing Ethernet switch chips. The chip supports both Scale-Up and Scale-Out and can integrate Co-packaged Optics (CPO), which significantly reduces power consumption and latency and improves the flexibility and efficiency of AI clusters.
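The headline figures line up with simple port arithmetic. The sketch below assumes that the full 102.4 Tbps is carried over 200G SerDes lanes and compares it with a 51.2 Tbps prior-generation chip; the port speeds listed are illustrative configurations, not a product datasheet, and the helper names are hypothetical.

```python
# Back-of-the-envelope port math for a 102.4 Tbps switch ASIC.
# Assumption: all capacity is exposed through 200 Gbps SerDes lanes,
# and front-panel ports are built by ganging lanes together.

SWITCH_CAPACITY_GBPS = 102_400   # 102.4 Tbps, as stated for TH6
SERDES_LANE_GBPS = 200           # 200G SerDes
PREVIOUS_GEN_GBPS = 51_200       # 51.2 Tbps prior-generation chip (assumed baseline)


def lanes(capacity_gbps: int, lane_gbps: int) -> int:
    """Number of SerDes lanes needed to carry the full switching capacity."""
    return capacity_gbps // lane_gbps


def ports(capacity_gbps: int, port_gbps: int) -> int:
    """Number of front-panel ports of a given speed the capacity can feed."""
    return capacity_gbps // port_gbps


if __name__ == "__main__":
    print("200G lanes:", lanes(SWITCH_CAPACITY_GBPS, SERDES_LANE_GBPS))  # 512
    for speed in (200, 400, 800, 1600):
        print(f"{speed}G ports:", ports(SWITCH_CAPACITY_GBPS, speed))
    print("Capacity vs 51.2T:", SWITCH_CAPACITY_GBPS / PREVIOUS_GEN_GBPS)  # 2.0
```

Under these assumptions the chip exposes 512 lanes, which can be grouped into, for example, 128 ports at 800G or 64 ports at 1.6T.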

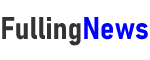

▲ Data center scale-up (vertical) and scale-out (horizontal) expansion.

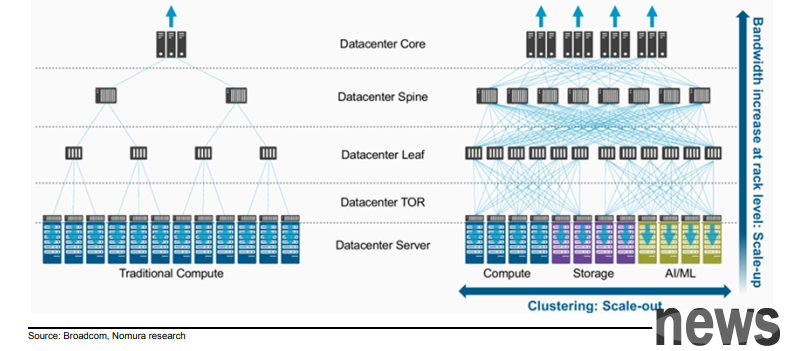

In AI networking, Scale-Out and Scale-Up form the two axes of development. Scale-Out emphasizes high-speed communication between AI server nodes and is typically based on Ethernet or NVIDIA-led InfiniBand. To challenge InfiniBand's market lead, the UEC standard promoted by the Ultra Ethernet Consortium is moving rapidly toward commercialization. Meanwhile, Google's in-house Optical Circuit Switch (OCS) has demonstrated advantages of more than 30% in energy efficiency and bandwidth.

At the Scale-Up layer, NVIDIA continues to develop NVLink Fusion, which extends its high-speed point-to-point GPU interconnect and opens it to partners such as MediaTek, Marvell, and Astera Labs for joint development. Broadcom has launched Scale-Up Ethernet (SUE), which can connect 1,024 XPUs in a single-layer network. AMD uses Infinity Fabric to build an efficient internal interconnect suited to large-scale compute and data-streaming workloads.

TH6 is optimized for AI training and inference. For Scale-Up, a single chip supports 512 XPU connections, seven times that of comparable products. In a two-tier Clos architecture it can support 100,000 XPUs while cutting the number of optical modules and the wiring complexity by 67%, significantly reducing power consumption and cost. TH6 also supports Cognitive Routing 2.0, which integrates global load balancing, dynamic congestion control, and optimal routing for AI workloads.
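One way to see why a higher-radix chip enlarges a two-tier network is the textbook leaf-spine capacity formula: with switches of radix k, a non-blocking two-tier folded Clos can attach k²/2 endpoints. The sketch assumes 200G ports (k = 512 for 102.4 Tbps, k = 256 for 51.2 Tbps), one port per XPU, and no oversubscription; it is an illustration of the general topology, not Broadcom's reference design, and it does not model the 67% reduction figure.

```python
# Rough capacity estimate for a non-blocking two-tier leaf-spine (folded Clos).
# Assumptions (illustrative, not from the report): every switch has radix k,
# each leaf splits its ports evenly between XPUs (down) and spines (up),
# and each XPU attaches with a single port.


def two_tier_clos(radix: int) -> dict:
    half = radix // 2
    spines = half               # each leaf has k/2 uplinks, one to every spine
    leaves = radix              # each spine's k ports reach k distinct leaves
    endpoints = leaves * half   # k/2 downlinks per leaf -> k^2 / 2 endpoints
    return {"leaves": leaves, "spines": spines, "endpoints": endpoints}


if __name__ == "__main__":
    # 256 ports ~ 51.2 Tbps at 200G; 512 ports ~ 102.4 Tbps at 200G
    for k in (256, 512):
        print(k, two_tier_clos(k))
```

Under these assumptions a 512-port switch reaches 131,072 XPUs in two tiers, while a 256-port switch tops out at 32,768 and would need a third tier to pass 100,000, which is where the savings in optics and cabling come from.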

Competing with Broadcom, Cisco and NVIDIA have also stepped up their pace. Cisco's Silicon One G200 is built on a 5-nanometer process and offers 51.2 Tbps of switching capacity. NVIDIA is launching the Spectrum-X800 platform and expects to release a photonics CPO version in 2026, supporting RoCEv3 and inline offload on the BlueField-3 SuperNIC.

▲ Deployment of Ethernet and InfiniBand in large data centers.

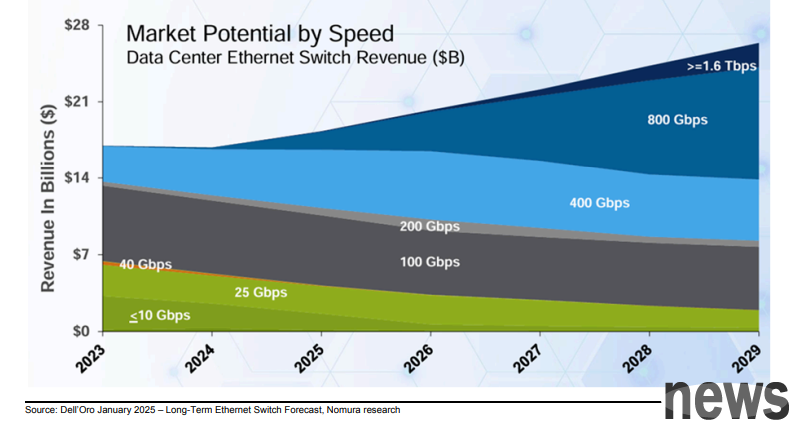

Research firm LightCounting projects that CPO switches will grow at a compound annual growth rate (CAGR) of 32% from 2023 to 2028, outpacing the 14% and 24% projected for Ethernet and InfiniBand switches, respectively. It also expects 800G switches to overtake 400G by 2027 and become the data center mainstream. IDC data likewise show that China's switch market grew 5.9% in 2024, while data center switches grew 23.3%, highlighting AI infrastructure build-out as a driving force.
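To put the LightCounting rates in perspective, compounding each rate over the five years from 2023 to 2028 gives the implied total growth multiple. This assumes each CAGR applies uniformly from a 2023 base; the multiples are plain arithmetic on the quoted rates, not additional forecast data, and `growth_multiple` is a hypothetical helper.

```python
# Total growth implied by a compound annual growth rate (CAGR).


def growth_multiple(cagr: float, years: int) -> float:
    """Total growth factor from compounding `cagr` for `years` years."""
    return (1.0 + cagr) ** years


YEARS = 2028 - 2023  # five compounding periods

forecasts = {
    "CPO switches": 0.32,
    "InfiniBand switches": 0.24,
    "Ethernet switches": 0.14,
}

for name, rate in forecasts.items():
    print(f"{name}: x{growth_multiple(rate, YEARS):.2f}")
```

This prints roughly x4.01 for CPO, x2.93 for InfiniBand, and x1.93 for Ethernet switches, so a 32% CAGR implies about four times the 2023 market by 2028.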

On the application side, OpenAI's daily active users (DAU) exceeded 110 million in June, keeping it the global leader. In China, ByteDance's Doubao has been rising, reaching 37 million DAU in early June and surpassing DeepSeek. Average daily usage of Doubao reached 10.8 minutes, indicating steadily improving user stickiness and depth of use. To maintain its market leadership, OpenAI released a new-generation model, o3-pro, in June, which emphasizes extended reasoning and tool integration and is priced at US$20 and US$80 per million input and output tokens, respectively. To address enterprise demand, OpenAI also cut the price of the base o3 model by 80%, which should significantly accelerate the adoption of AI models.
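Per-million-token pricing translates into per-request cost by simple arithmetic. The sketch uses the o3-pro prices quoted above; the request size (10,000 input and 2,000 output tokens) is a hypothetical example, and the helper names are illustrative rather than part of any OpenAI API.

```python
# Per-request cost under per-million-token pricing (USD).
# Prices are the o3-pro figures quoted above; token counts are hypothetical.

O3_PRO_INPUT_PER_M = 20.0    # USD per 1M input tokens
O3_PRO_OUTPUT_PER_M = 80.0   # USD per 1M output tokens


def request_cost(input_tokens: int, output_tokens: int,
                 in_price_per_m: float, out_price_per_m: float) -> float:
    """Cost of one request given token counts and per-million prices."""
    return (input_tokens * in_price_per_m
            + output_tokens * out_price_per_m) / 1_000_000


def after_cut(price: float, cut: float) -> float:
    """Price remaining after a fractional cut (0.80 means 80% off)."""
    return price * (1.0 - cut)


if __name__ == "__main__":
    cost = request_cost(10_000, 2_000, O3_PRO_INPUT_PER_M, O3_PRO_OUTPUT_PER_M)
    print(f"o3-pro, 10k in / 2k out: ${cost:.2f}")
    # An 80% cut leaves 20% of the original list price.
    print(f"Share of price remaining: {after_cut(1.0, 0.80):.0%}")
```

The example request costs about US$0.36, with output tokens accounting for nearly half of the total despite being only a sixth of the token count.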

▲ Data center Ethernet switch shipments by port speed.

Beyond technology, AI industry investment and events remain hot. At VivaTech, NVIDIA unveiled Cosmos Predict-2 along with platforms for autonomous-driving models and quantum computing, and announced an AI cloud factory with France's Mistral AI. AMD released the Instinct MI350 GPU series, which delivers a fourfold increase in AI compute over the previous generation and supports models with 520 billion parameters.

Looking ahead, the AI switch market is expected to move toward white-box designs and high-end chips. Vendors such as Arista and white-box maker Accton continue to expand, and in 2024 Arista overtook Cisco to become the leader in the high-performance switch market. In high-end switch chips, Broadcom, Cisco, and NVIDIA form a three-way contest with Tomahawk 6, Silicon One G200, and Spectrum-X800, respectively.